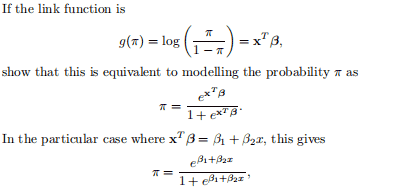

Consider maximizing the function $L(\mathbf{W}, \mathbf{H})$, written here without matrix notation:

$$

L(\mathbf{W}, \mathbf{H})=\sum_{i=1}^{N} \sum_{j=1}^{p}\left[x_{i j} \log \left(\sum_{k=1}^{r} w_{i k} h_{k j}\right)-\sum_{k=1}^{r} w_{i k} h_{k j}\right] .

$$
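To make the double sum concrete, the following minimal sketch evaluates $L(\mathbf{W}, \mathbf{H})$ directly from its definition. The function name `nmf_log_likelihood` and the small example matrices are illustrative assumptions, not part of the exercise, and the code assumes every entry of $\mathbf{W}\mathbf{H}$ is strictly positive so that the logarithm is defined.

```python
import math

def nmf_log_likelihood(X, W, H):
    """Evaluate L(W, H) = sum_ij [ x_ij * log(mu_ij) - mu_ij ],
    where mu_ij = sum_k w_ik * h_kj.

    X is N x p, W is N x r, H is r x p, all given as lists of lists.
    Assumes every mu_ij is strictly positive (hypothetical restriction).
    """
    N, p, r = len(X), len(X[0]), len(H)
    total = 0.0
    for i in range(N):
        for j in range(p):
            mu = sum(W[i][k] * H[k][j] for k in range(r))
            total += X[i][j] * math.log(mu) - mu
    return total

# Illustrative data (made up for this sketch).
X = [[1.0, 2.0], [3.0, 4.0]]
W = [[1.0, 0.5], [0.5, 1.0]]
H = [[1.0, 2.0], [2.0, 3.0]]
print(nmf_log_likelihood(X, W, H))
```

Each summand $x_{ij} \log \mu - \mu$ is largest at $\mu = x_{ij}$, so a factorization that reproduces $\mathbf{X}$ exactly can never score lower than one that does not.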

Using the concavity of $\log (x)$, show that for any set of $r$ values $y_{k} \geq 0$ and $0 \leq c_{k} \leq 1$ with $\sum_{k=1}^{r} c_{k}=1$,

$$

\log \left(\sum_{k=1}^{r} y_{k}\right) \geq \sum_{k=1}^{r} c_{k} \log \left(y_{k} / c_{k}\right)

$$
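The inequality above can be checked numerically before attempting the proof. This is only a sanity check under stated assumptions, not a derivation: the helper `jensen_gap` is a hypothetical name, the inputs are assumed to satisfy $y_{k} > 0$, and terms with $c_{k} = 0$ are skipped (treating $0 \cdot \log(\cdot)$ as $0$).

```python
import math

def jensen_gap(y, c):
    """Return log(sum_k y_k) - sum_k c_k * log(y_k / c_k).

    The inequality in the text says this gap is non-negative whenever
    the c_k are non-negative and sum to 1. Terms with c_k == 0 are
    skipped; y_k is assumed strictly positive.
    """
    lhs = math.log(sum(y))
    rhs = sum(ck * math.log(yk / ck) for yk, ck in zip(y, c) if ck > 0)
    return lhs - rhs

y = [1.0, 2.0, 3.0]

# Arbitrary weights: the gap should be positive.
print(jensen_gap(y, [1 / 3, 1 / 3, 1 / 3]))

# Weights proportional to y_k: the bound holds with equality,
# up to floating-point rounding.
total = sum(y)
print(jensen_gap(y, [yk / total for yk in y]))
```

The second call illustrates the equality case: with $c_{k} = y_{k} / \sum_{l} y_{l}$, every ratio $y_{k} / c_{k}$ equals $\sum_{l} y_{l}$, so the right-hand side collapses to the left-hand side.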

MSTAT 502/STAT 316/MTH 513A/MATH 321/STAT 210/STA 106 COURSE NOTES:

For $m=1$ to $M$:

(a) Fit a classifier $G_{m}(x)$ to the training data using weights $w_{i}$.

(b) Compute

$$

\operatorname{err}_{m}=\frac{\sum_{i=1}^{N} w_{i} I\left(y_{i} \neq G_{m}\left(x_{i}\right)\right)}{\sum_{i=1}^{N} w_{i}}

$$

(c) Compute $\alpha_{m}=\log \left(\left(1-\operatorname{err}_{m}\right) / \operatorname{err}_{m}\right)$.

(d) Set $w_{i} \leftarrow w_{i} \cdot \exp \left[\alpha_{m} \cdot I\left(y_{i} \neq G_{m}\left(x_{i}\right)\right)\right], i=1,2, \ldots, N$.

Output $G(x)=\operatorname{sign}\left[\sum_{m=1}^{M} \alpha_{m} G_{m}(x)\right]$.
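Steps (a) through (d) and the final output rule can be sketched in a few lines of Python. Several choices here are assumptions rather than part of the notes: the weak learner is a one-dimensional decision stump (`fit_stump`, a hypothetical helper), labels are coded as $-1$ and $+1$, the weights start at $w_{i}=1/N$ (the usual initialization, which the listing above does not show), $\operatorname{err}_{m}$ is clamped away from 0 and 1 to keep $\alpha_{m}$ finite, and a score of exactly zero is mapped to $+1$.

```python
import math

def fit_stump(X, y, w):
    """Step (a): pick the stump with the smallest weighted error.

    A stump predicts s when x > t and -s otherwise, with s in {+1, -1}.
    This exhaustive search is a hypothetical weak learner for 1-D inputs.
    """
    best = None
    for t in sorted(set(X)):
        for s in (1, -1):
            err = sum(wi for xi, yi, wi in zip(X, y, w)
                      if (s if xi > t else -s) != yi)
            if best is None or err < best[0]:
                best = (err, t, s)
    _, t, s = best
    return lambda x: s if x > t else -s

def adaboost(X, y, M):
    """Run M boosting rounds and return the combined classifier G(x)."""
    N = len(X)
    w = [1.0 / N] * N  # assumed initialization, not shown in the listing
    ensemble = []
    for _ in range(M):
        G = fit_stump(X, y, w)                                   # step (a)
        miss = [G(xi) != yi for xi, yi in zip(X, y)]
        err = sum(wi for wi, m in zip(w, miss) if m) / sum(w)    # step (b)
        err = min(max(err, 1e-10), 1 - 1e-10)  # guard added for this sketch
        alpha = math.log((1 - err) / err)                        # step (c)
        w = [wi * math.exp(alpha) if m else wi                   # step (d)
             for wi, m in zip(w, miss)]
        ensemble.append((alpha, G))

    def predict(x):
        # Output rule: sign of the alpha-weighted vote (0 mapped to +1).
        score = sum(a * G(x) for a, G in ensemble)
        return 1 if score >= 0 else -1

    return predict

# Illustrative data: no single stump separates this pattern.
X = [1, 2, 3, 4, 5, 6]
y = [1, 1, -1, -1, 1, 1]
G = adaboost(X, y, M=3)
print([G(x) for x in X])
```

As in the listing, the weights are not renormalized between rounds; the division by $\sum_{i} w_{i}$ in step (b) makes that unnecessary for computing $\operatorname{err}_{m}$.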